import numpy as np import matplotlib.pyplot as plt from keras.datasets import imdb from keras.preprocessing.text import Tokenizer from keras import models from keras import layers from keras.callbacks import EarlyStopping from sklearn.model_selection import train_test_split

先日の手書きデータです。

x_train = np.array([ [0,0,0,0,1,0,0,1,1,1,0,1,1,0,0,1,1,0,0,0,1,0,0,0,0], [0,0,0,0,1,0,0,0,1,1,0,0,1,1,0,0,1,1,0,0,0,1,0,0,0], [0,0,0,1,1,0,0,1,1,0,0,1,1,0,0,1,1,0,0,0,1,0,0,0,0], [0,0,0,0,0,0,0,0,0,1,0,0,0,1,1,0,1,1,1,0,1,1,0,0,0], [0,0,0,0,1,0,0,0,1,1,0,0,1,1,0,0,1,1,0,0,1,0,0,0,0], [0,0,0,0,0,0,0,0,0,1,0,0,0,1,1,0,0,1,1,0,0,1,0,0,0], [0,0,0,0,1,0,0,1,1,0,0,1,1,0,0,1,1,0,0,0,0,0,0,0,0], [0,0,0,0,1,0,0,1,1,0,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0], [0,0,0,0,0,0,0,0,0,1,0,0,0,1,1,0,0,1,1,0,0,1,1,0,0], [0,0,0,0,0,0,0,1,1,1,0,1,1,0,0,1,1,0,0,0,1,0,0,0,0], [0,0,0,0,0,0,0,0,0,1,0,0,0,1,1,0,1,1,1,0,0,1,0,0,0], [0,0,0,1,1,0,0,0,1,0,0,0,1,1,0,0,0,1,0,0,0,1,0,0,0], [0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,1,1,1,1,0,0,0,0,0,0], [0,0,0,0,0,0,0,0,1,1,0,1,1,1,0,1,1,0,0,0,1,0,0,0,0], [0,0,0,1,1,0,0,0,1,0,0,0,1,1,0,0,1,1,0,0,0,1,0,0,0], [0,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,0,0,1], [1,1,0,0,0,0,1,1,1,0,0,0,0,1,1,0,0,0,0,1,0,0,0,0,0], [0,0,0,0,0,0,0,0,0,0,1,1,1,0,0,0,0,1,1,0,0,0,0,1,1], [1,0,0,0,0,1,1,0,0,0,0,1,0,0,0,0,1,1,1,0,0,0,0,1,0], [0,0,0,0,0,1,1,0,0,0,0,1,1,1,1,0,0,0,0,0,0,0,0,0,0], [0,0,0,0,0,0,0,0,0,0,1,1,0,0,0,0,1,1,1,0,0,0,0,1,1], [1,1,0,0,0,0,1,0,0,0,0,1,1,1,0,0,0,0,1,0,0,0,0,1,0], [0,0,0,0,0,1,1,1,0,0,0,0,1,1,1,0,0,0,0,0,0,0,0,0,0], [1,0,0,0,0,1,1,0,0,0,0,1,0,0,0,0,1,1,1,0,0,0,0,1,0], [0,0,0,0,0,1,1,0,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,0,0], [0,0,1,0,0,0,0,1,0,0,0,0,1,1,1,0,0,0,0,1,0,0,0,0,1], [0,1,0,0,0,0,1,1,0,0,0,0,1,1,1,0,0,0,0,0,0,0,0,0,0], [0,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,0,1,0], [0,1,0,0,0,0,1,1,0,0,0,0,1,1,1,0,0,0,0,1,0,0,0,0,0], [1,0,0,0,0,1,1,1,0,0,0,0,1,0,0,0,0,1,1,0,0,0,0,0,1], ])

右上から左下への線は「1」

左上から右下への線は「0」としようと思います。

y_train = np.array([1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,]) x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size=0.2, random_state=0) print(x_train.shape, x_val.shape, y_train.shape, y_val.shape)

(24, 25) (6, 25) (24,) (6,)

model = models.Sequential() model.add(layers.Dense(1, activation='sigmoid', input_shape=(25,))) model.summary()

Model: "sequential_13"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_27 (Dense) (None, 1) 26

=================================================================

Total params: 26

Trainable params: 26

Non-trainable params: 0

_________________________________________________________________

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

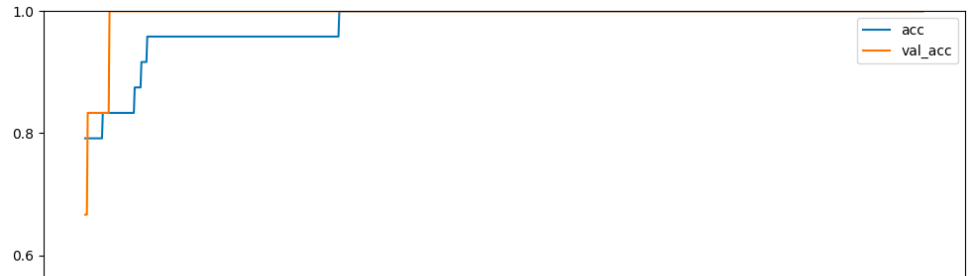

100回くらいでしたら、いまいちでしたので、

正しいのか分かりませんが、1000回で。

history = model.fit(x=x_train,

y=y_train,

epochs=1000,

verbose=0,

validation_data=(x_val, y_val))

history_dict = history.history

acc = history_dict['accuracy']

val_acc = history_dict['val_accuracy']

epochs = range(1, len(acc) + 1)

plt.figure(figsize=(12, 8))

plt.plot(epochs, acc, label='acc')

plt.plot(epochs, val_acc, label='val_acc')

plt.ylim((0, 1))

plt.legend(loc='best')

plt.show()

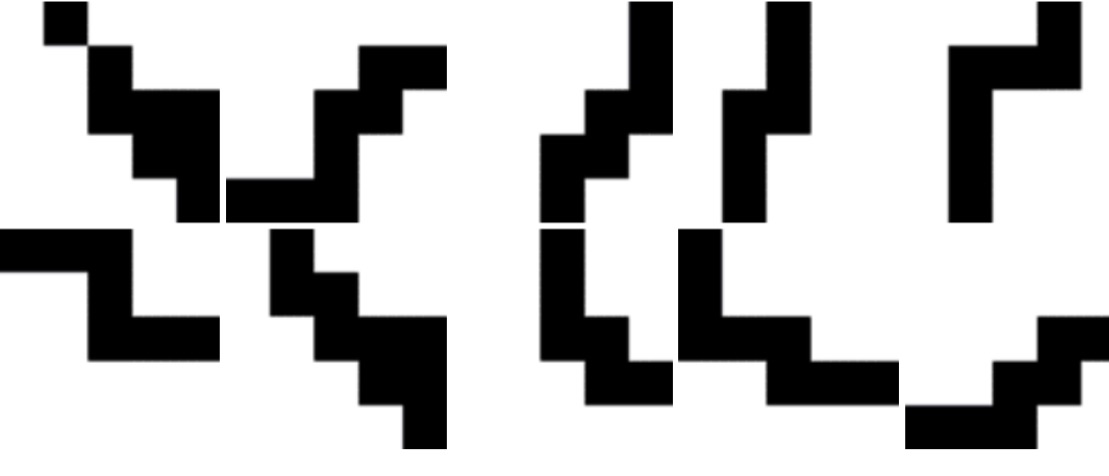

できたモデルに、追加データで予測。

test = np.array([ [0,0,0,0,1,0,0,0,0,1,0,0,1,1,1,0,1,1,0,0,0,1,0,0,0], [0,0,0,0,0,0,0,0,0,0,0,0,0,1,1,0,0,1,1,0,1,1,1,0,0], [0,0,0,0,1,0,0,0,0,1,0,0,0,1,1,0,0,1,1,0,0,0,1,0,0], [0,0,0,0,0,0,1,1,1,1,0,1,0,0,0,1,1,0,0,0,0,0,0,0,0], [0,0,0,0,0,0,0,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0], [1,0,0,0,0,1,0,0,0,0,1,1,1,0,0,0,0,1,0,0,0,0,1,0,0], [1,1,1,0,0,0,0,1,0,0,0,0,1,1,0,0,0,0,1,0,0,0,0,1,0], [0,0,0,0,0,0,0,0,0,0,1,1,1,0,0,0,0,1,1,0,0,0,0,1,0], [0,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,1,1,1], [0,0,0,0,0,1,0,0,0,0,0,1,1,0,0,0,0,1,1,0,0,0,1,1,1]])

やばい、すご過ぎる。

predictions = model.predict(test)

print(predictions)

[[0.92442065]

[0.90009964]

[0.9115938 ]

[0.9031062 ]

[0.38198504]

[0.12939417]

[0.00990942]

[0.06089722]

[0.03855555]

[0.05584331]]

これが重みか〜。手動で計算できるかな?

確かに右上から左下にかけて、

大きな値になってるなー。すご。

print(len(l0.get_weights())) print(l0.get_weights()[0]) print(l0.get_weights()[1])

2

[[-0.9030642 ]

[-0.58427167]

[-0.9232676 ]

[ 0.93159467]

[ 0.9419235 ]

[-0.50364554]

[-0.38859457]

[ 0.14480409]

[ 1.0228486 ]

[ 0.39397684]

[-0.27923834]

[-0.7368595 ]

[-1.126273 ]

[ 0.32510123]

[-0.38320494]

[ 0.98702824]

[ 0.7094111 ]

[ 0.9439723 ]

[-0.5872988 ]

[-0.4665192 ]

[ 0.60151327]

[ 0.5994672 ]

[ 0.59917206]

[-1.0496505 ]

[-0.46676916]]

[0.09961005]